45 learning with less labels

Learning with less labels in Digital Pathology via Scribble Supervision from natural images (arXiv:2201.02627v1, submitted on 7 Jan 2022), by Eu Wern Teh and Graham W. Taylor: A critical challenge of training deep learning models in the Digital Pathology (DP) domain is the high cost of annotation by medical experts.

Machine learning with less than one example - TechTalks: A new technique dubbed "less-than-one-shot learning" (or LO-shot learning), recently developed by AI scientists at the University of Waterloo, takes one-shot learning to the next level. The idea behind LO-shot learning is that to train a machine learning model to detect M classes, you need fewer than M training examples, that is, less than one sample per class.
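How can fewer examples than classes be enough? One way is to give each example a *soft* label, a probability spread over several classes. The sketch below is a simplified distance-weighted soft-label vote that is assumed for illustration only. It is not necessarily the exact algorithm from the research described above. Two hypothetical 1-D prototypes carve the line into three class regions.

```python
# Hypothetical setup: two 1-D prototypes carrying soft labels over three classes.
PROTOTYPES = [
    (0.0, [0.6, 0.4, 0.0]),  # mostly class 0, partly class 1
    (1.0, [0.0, 0.4, 0.6]),  # mostly class 2, partly class 1
]

def classify(x: float, eps: float = 1e-9) -> int:
    """Distance-weighted soft-label vote; returns the index of the winning class."""
    scores = [0.0, 0.0, 0.0]
    for position, soft_label in PROTOTYPES:
        weight = 1.0 / (abs(x - position) + eps)  # closer prototypes count more
        for c, p in enumerate(soft_label):
            scores[c] += weight * p
    # argmax over the accumulated class scores
    return max(range(len(scores)), key=scores.__getitem__)

if __name__ == "__main__":
    for x in (0.1, 0.5, 0.9):
        print(x, classify(x))
```

Between the two prototypes, points with x < 1/3 go to class 0, points with 1/3 < x < 2/3 go to class 1, and points with x > 2/3 go to class 2. That gives three classes from two examples.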

Pro Tips: How to deal with Class Imbalance and Missing Labels: Any of the classifiers discussed there can be used to train the malware classification model. Class imbalance: as the name implies, class imbalance is a classification challenge in which the classes are not equally represented in the data. The degree of imbalance can be minor, for example 4:1, or extreme, like 1,000,000:1.
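The imbalance ratio mentioned above is easy to measure. A common mitigation is to weight each class inversely to its frequency. The minimal sketch below uses the formula n_samples / (n_classes * count), which is the same heuristic scikit-learn applies for `class_weight="balanced"`. The labels "benign" and "malware" are hypothetical.

```python
from collections import Counter

def imbalance_ratio(labels):
    """Ratio of the most frequent to the least frequent class count (4.0 means 4:1)."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())

def inverse_frequency_weights(labels):
    """Per-class weights n_samples / (n_classes * count), so rarer classes weigh more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * cnt) for cls, cnt in counts.items()}

if __name__ == "__main__":
    labels = ["benign"] * 80 + ["malware"] * 20  # hypothetical 4:1 dataset
    print(imbalance_ratio(labels))            # 4.0
    print(inverse_frequency_weights(labels))  # {'benign': 0.625, 'malware': 2.5}
```

These weights can then scale each example's loss, so that the rare class is not drowned out during training.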

Learning with less labels

Better reliability of deep learning classification models by exploiting ...: One way to establish such a check is to use information from the hierarchical structure of the labels, often seen in public datasets (CIFAR-10, ImageNet). The goal is to build a second model, trained on the parent labels in the hierarchy; this post will show why this increases the reliability of models and at what cost.

Learning with Less Labels and Imperfect Data | MICCAI 2020 - hvnguyen: This workshop aims to create a forum for discussing best practices in medical image learning with label scarcity and data imperfection. It could help answer many important questions. For example, several recent studies found that deep networks are robust to massive random label noise but more sensitive to structured label noise.

Simplified Transfer Learning for Chest Radiography Models ... (Jul 19, 2022): Background: Developing deep learning models for radiology requires large data sets and substantial computational resources. Data set size limitations can be further exacerbated by distribution shifts, such as rapid changes in patient populations and standard of care during the COVID-19 pandemic. A common partial mitigation is transfer learning by pretraining a "generic network" on a large ...
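The hierarchical-label check from the reliability post can be sketched as follows. We assume a fine-grained model and a parent-label model have each produced a prediction for the same image. A prediction is flagged when the fine class does not belong to the predicted parent. The vehicle/animal grouping of the CIFAR-10 classes is an assumption made for this example and is not taken from the post.

```python
# Hypothetical coarse grouping of the ten CIFAR-10 classes into two parent labels.
PARENT = {
    "airplane": "vehicle", "automobile": "vehicle", "ship": "vehicle", "truck": "vehicle",
    "bird": "animal", "cat": "animal", "deer": "animal",
    "dog": "animal", "frog": "animal", "horse": "animal",
}

def is_consistent(fine_prediction: str, coarse_prediction: str) -> bool:
    """True when the fine model's class belongs to the parent predicted by the coarse model."""
    return PARENT[fine_prediction] == coarse_prediction

def flag_unreliable(predictions):
    """Return indices of (fine, coarse) prediction pairs that disagree."""
    return [i for i, (fine, coarse) in enumerate(predictions)
            if not is_consistent(fine, coarse)]

if __name__ == "__main__":
    pairs = [("cat", "animal"), ("truck", "animal"), ("ship", "vehicle")]
    print(flag_unreliable(pairs))  # [1]
```

The cost the post alludes to is visible here: a second model has to be trained and run for every input, just to provide this cross-check.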

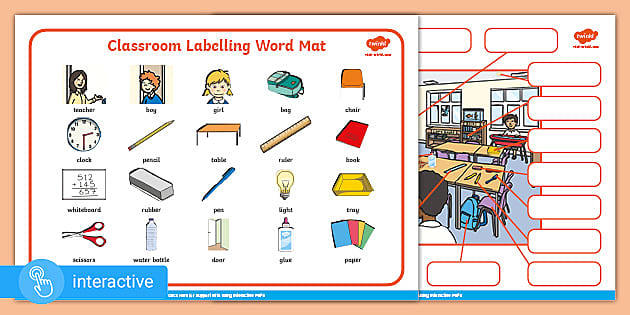

Learning Labels - A System to Manage and Track Skills: Map Learning in ...: Learning Labels (Skills Label TM) is a system to manage and track skills. This includes defining learning in skills, career in skills, and creating effective pathways. The online application includes all this functionality and more. The paper introduces the key themes and ideas, current functionality, and future vision.

Free Classroom Labels Teaching Resources | Teachers Pay Teachers: Browse free classroom labels resources on Teachers Pay Teachers, a marketplace trusted by millions of teachers for original educational resources. ... Organise all of your teaching resources, classroom supplies, learning games, literacy rotations and so much more with these EDITABLE and ...

Learning with Less Labels and Imperfect Data | Hien Van Nguyen: Topics include methods such as one-shot learning or transfer learning that leverage large imperfect datasets and a modest number of labels to achieve good performance; methods for removing or rectifying noisy data or labels; and techniques for estimating uncertainty due to the lack of data or noisy input, such as Bayesian deep networks.

Printable Classroom Labels for Preschool - Pre-K Pages: This printable set includes more than 140 different labels you can print out and use in your classroom right away. The text is also editable, so you can type the words in your own language or edit them to meet your needs. To attach the labels to the bins in your centers, I love using the sticky-back label pockets from Target.

The switch Statement (The Java™ Tutorials > Learning the Java ...): Deciding whether to use if-then-else statements or a switch statement is based on readability and the expression that the statement is testing. An if-then-else statement can test expressions based on ranges of values or conditions, whereas a switch statement tests expressions based only on a single integer, enumerated value, or String object.

Less Labels, More Efficiency: Charles River Analytics Develops New ...: Charles River Analytics Inc., developer of intelligent systems solutions, has received funding from the Defense Advanced Research Projects Agency (DARPA) as part of the Learning with Less Labels program. This program is focused on making machine-learning models more efficient and reducing the amount of labeled data required to build models.

LwFLCV: Learning with Fewer Labels in Computer Vision: This special issue focuses on learning with fewer labels for computer vision tasks such as image classification, object detection, semantic segmentation, and instance segmentation, among others. Topics of interest include (but are not limited to): self-supervised learning methods; new methods for few-/zero-shot learning.

Darpa Learning With Less Label Explained - Topio Networks: The DARPA Learning with Less Labels (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data needed to build the model or adapt it to new environments. In the context of this program, we are contributing Probabilistic Model Components to support LwLL.

Learning Nutrition Labels - Eat Smart, Move More, Weigh Less: The example label indicates that there are 250 calories per serving, and one serving is one cup. If we ate 1½ cups of this food, we would get 250 + 125 calories, for a total of 375 calories. Pay close attention to serving sizes, as they are critical to managing calories and body weight. Limit the nutrients highlighted in yellow, which are nutrients ...

No labels? No problem! Machine learning without labels using Snorkel: Snorkel can make labelling data a breeze. There is a certain irony that machine learning, a tool used for the automation of tasks and processes, often starts with the highly manual process of data labelling.

Learning about Labels | Share My Lesson: Learning about Labels, from GLSEN's No Name-Calling Week. Subject: Health and Wellness (Mental, Emotional and Social Health). Grade level: Grades 6-12, Paraprofessional and School Related Personnel, ... Students will gain an understanding of labels and attached stereotypes. They will also gain an understanding of seeing someone as a whole person and will ...

Learning in Spite of Labels (paperback, December 1, 1994) by Joyce Herzog: All children can learn. It is time to stop teaching subjects and start teaching children!
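The Snorkel snippet refers to replacing hand labeling with *labeling functions*. These are small heuristics that each vote on a label or abstain. The minimal plain-Python sketch below shows the idea. It does not use Snorkel's API, and it substitutes a simple majority vote for the learned label model Snorkel uses to combine votes. The spam/ham task and the three heuristics are hypothetical.

```python
from collections import Counter

ABSTAIN = None  # a labeling function returns this when it has no opinion

# Hypothetical heuristics for a spam/ham text task; none come from the Snorkel post.
def lf_contains_free(text):
    return "spam" if "free" in text.lower() else ABSTAIN

def lf_contains_link(text):
    return "spam" if "http" in text.lower() else ABSTAIN

def lf_short_reply(text):
    return "ham" if len(text.split()) <= 3 else ABSTAIN

LABELING_FUNCTIONS = [lf_contains_free, lf_contains_link, lf_short_reply]

def weak_label(text):
    """Majority vote over non-abstaining labeling functions; None if all abstain or tie."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v is not ABSTAIN]
    if not votes:
        return None
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie: leave the example unlabeled
    return ranked[0][0]

if __name__ == "__main__":
    for t in ["Get a FREE gift at http://x.y", "ok thanks", "free now"]:
        print(repr(t), weak_label(t))
```

Examples that stay unlabeled (None) are simply left out of the weakly labeled training set. A learned label model would instead weight each function by its estimated accuracy.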

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub:

- 2017 - Learning with Auxiliary Less-Noisy Labels.
- 2018-AAAI - Deep learning from crowds.
- 2018-ICLR - mixup: Beyond Empirical Risk Minimization.
- 2018-ICLR - Learning From Noisy Singly-labeled Data.
- 2018-ICLR_W - How Do Neural Networks Overcome Label Noise?
- 2018-CVPR - CleanNet: Transfer Learning for Scalable Image Classifier Training with Label ...
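One of the listed papers, mixup, trains on convex combinations of pairs of examples and their one-hot labels. The mixing weight λ is drawn from a Beta(α, α) distribution. The minimal sketch below works on plain Python lists. The default α = 0.2 is only an illustrative choice.

```python
import random

def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Blend two examples and their one-hot labels with lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]  # mixed input
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]  # mixed soft label
    return x, y, lam

if __name__ == "__main__":
    x, y, lam = mixup([0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0], rng=random.Random(0))
    print(lam, x, y)
```

Because the mixed label is a soft target, the model is discouraged from fitting any single (possibly mislabeled) example with full confidence. This is why mixup appears in lists of methods that handle label noise.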

Labeling with Active Learning - DataScienceCentral.com: As in human-in-the-loop analytics, active learning is about adding a human to label data manually between iterations of the model training process (Fig. 1). Here, the human and the model take turns classifying, i.e., labeling, unlabeled instances of the data, repeating the following steps. Step a: manual labeling of a subset of data.
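The alternating loop described above can be sketched as a single round of uncertainty sampling. The model is trained and then scores the unlabeled pool, and a human labels the examples the model is least confident about. Least-confidence selection is a common choice that is assumed here; the article may use a different criterion. `train`, `predict_proba`, and `ask_human` are hypothetical callables that stand in for a real model and annotator.

```python
def least_confident(probabilities, batch_size):
    """Indices of the unlabeled examples whose top predicted probability is lowest."""
    confidence = [max(p) for p in probabilities]
    ranked = sorted(range(len(probabilities)), key=lambda i: confidence[i])
    return ranked[:batch_size]

def active_learning_round(labeled, unlabeled, train, predict_proba, ask_human, batch_size=2):
    """One hypothetical round: train, score the pool, query a human, grow the labeled set."""
    model = train(labeled)
    probs = [predict_proba(model, x) for x in unlabeled]
    chosen = set(least_confident(probs, batch_size))
    # the human labels only the chosen examples
    labeled = labeled + [(unlabeled[i], ask_human(unlabeled[i])) for i in sorted(chosen)]
    unlabeled = [x for i, x in enumerate(unlabeled) if i not in chosen]
    return labeled, unlabeled
```

Calling `active_learning_round` repeatedly until the labeling budget runs out reproduces the human-and-model turn-taking described in the snippet.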

Learning With Auxiliary Less-Noisy Labels - PubMed Instead, in real-world applications, less-accurate labels, such as labels from nonexpert labelers, are often used. However, learning with less-accurate labels can lead to serious performance deterioration because of the high noise rate.

Printable Dramatic Play Labels - Pre-K Pages I'm Vanessa, I help busy Pre-K and Preschool teachers plan effective and engaging lessons, create fun, playful learning centers, and gain confidence in the classroom. As a Pre-K teacher with more than 20 years of classroom teaching experience, I'm committed to helping you teach better, save time, stress less, and live more.


Learning With Less Labels - YouTube

Learning With Less Labels (LwLL) - mifasr: The Defense Advanced Research Projects Agency will host a proposers' day in search of expertise to support Learning with Less Labels, a program aiming to reduce the amount of information needed to train machine learning models. The event will run on July 12 at the DARPA Conference Center in Arlington, Va., the agency said Wednesday.


Learning To Read Labels :: Diabetes Education Online Remember, when you are learning to count carbohydrates, measure the exact serving size to help train your eye to see what portion sizes look like. When, for example, the serving size is 1 cup, then measure out 1 cup. If you measure out a cup of rice, then compare that to the size of your fist.

Introduction to Semi-Supervised Learning - Javatpoint: Semi-supervised learning is an important category of machine learning that lies between supervised and unsupervised learning. It operates on data that contains a few labels but mostly consists of unlabeled examples.
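Self-training (pseudo-labeling) is one common way to use the mostly unlabeled data described above. The snippet does not name a specific method, so the following is an assumed illustration. A model fitted on the labeled examples labels the unlabeled points it is confident about, and then it is refit on the enlarged set. The nearest-centroid classifier on 1-D features, the margin-based confidence, `min_margin=1.0`, and `rounds=5` are all hypothetical choices.

```python
def nearest_centroid_fit(labeled):
    """Mean of the 1-D feature per class: {class: centroid}."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict_with_margin(centroids, x):
    """Closest class and the gap between the closest and second-closest centroid distances."""
    dists = sorted((abs(x - c), y) for y, c in centroids.items())
    margin = dists[1][0] - dists[0][0] if len(dists) > 1 else float("inf")
    return dists[0][1], margin

def self_train(labeled, unlabeled, min_margin=1.0, rounds=5):
    """Repeatedly pseudo-label confident unlabeled points and refit on the enlarged set."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(rounds):
        centroids = nearest_centroid_fit(labeled)
        confident, remaining = [], []
        for x in unlabeled:
            y, margin = predict_with_margin(centroids, x)
            (confident if margin >= min_margin else remaining).append((x, y))
        if not confident:
            break  # nothing left that the model is sure about
        labeled += confident
        unlabeled = [x for x, _ in remaining]
    return nearest_centroid_fit(labeled), unlabeled

if __name__ == "__main__":
    print(self_train([(0.0, "a"), (10.0, "b")], [1.0, 2.0, 9.0, 5.0]))
```

With two labeled points and four unlabeled ones, the three points that are clearly near one class are absorbed. The ambiguous point at 5.0 is left unlabeled rather than guessed.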

GitHub - weijiaheng/Advances-in-Label-Noise-Learning: A ... (Jun 15, 2022):

- Contrast to Divide: Self-Supervised Pre-Training for Learning with Noisy Labels.
- Exponentiated Gradient Reweighting for Robust Training Under Label Noise and Beyond.
- Understanding the Interaction of Adversarial Training with Noisy Labels.
- Learning from Noisy Labels via Dynamic Loss Thresholding.

Learning with Less Labeling (LwLL) | Zijian Hu The Learning with Less Labeling (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data required to build a model by six or more orders of magnitude, and by reducing the amount of data needed to adapt models to new environments to tens to hundreds of labeled examples.

Less Labels, More Learning | AI News & Insights (published Mar 11, 2020): In small data settings where labels are scarce, semi-supervised learning can train models by using a small number of labeled examples and a larger set of unlabeled examples. A new method outperforms earlier techniques.

BRIEF - Occupational Safety and Health Administration “Warning” is used for the less severe hazards. There will only be one signal word on the label no matter how many hazards a chemical may have. If one of the hazards warrants a “Danger” signal word and another warrants the signal word “Warning,” then only “Danger” should appear on the label. • Hazard Statements describe the nature
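The one-signal-word rule above works like a "most severe wins" selection. The short sketch below illustrates that logic only. It is not a compliance tool. The input is assumed to be the list of signal words warranted by each of a chemical's hazards, and returning None for an empty list is an assumption of this sketch.

```python
SEVERITY = {"Warning": 1, "Danger": 2}  # "Danger" is the more severe signal word

def label_signal_word(hazard_signal_words):
    """Return the single signal word for a label, or None if no hazard warrants one."""
    if not hazard_signal_words:
        return None
    # only the most severe signal word appears, however many hazards there are
    return max(hazard_signal_words, key=SEVERITY.__getitem__)

if __name__ == "__main__":
    print(label_signal_word(["Warning", "Danger", "Warning"]))  # Danger
```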


Human activity recognition: learning with less labels and ... - SPIE: First, I will present our Uncertainty-aware Pseudo-label Selection (UPS) method for semi-supervised learning, where the goal is to leverage a large unlabeled dataset alongside a small labeled dataset. Next, I will present a self-supervised method, TCLR: Temporal Contrastive Learning for Video Representations, which does not require labeled data.
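The central idea of uncertainty-aware pseudo-label selection is to keep a pseudo-label only when the model is both confident *and* certain. The sketch below is simplified and only inspired by that idea; it is not the authors' implementation. Uncertainty is estimated as the standard deviation of the predicted class's probability across several stochastic forward passes, for example with dropout left on. Negative pseudo-labels and other details of UPS are omitted. Both thresholds are hypothetical values.

```python
from statistics import mean, pstdev

def select_pseudo_labels(mc_predictions, conf_threshold=0.7, unc_threshold=0.05):
    """
    mc_predictions: for each unlabeled example, a list of probability vectors
    from several stochastic forward passes (e.g. with dropout left on).
    Returns (index, class) pairs whose mean confidence is high and whose
    spread across passes (uncertainty) is low.
    """
    selected = []
    for i, passes in enumerate(mc_predictions):
        n_classes = len(passes[0])
        mean_probs = [mean(p[c] for p in passes) for c in range(n_classes)]
        top = max(range(n_classes), key=mean_probs.__getitem__)
        uncertainty = pstdev([p[top] for p in passes])  # spread of the top class
        if mean_probs[top] >= conf_threshold and uncertainty <= unc_threshold:
            selected.append((i, top))
    return selected
```

For example, an input whose passes give 0.95, 0.45, and 0.9 for one class has a mean confidence above 0.7. It is still rejected, because its predictions disagree too much across passes. A confidence-only filter would have accepted it.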


Learning with Less Labels (LwLL) - Federal Grant Learning with Less Labels (LwLL) The summary for the Learning with Less Labels (LwLL) grant is detailed below. This summary states who is eligible for the grant, how much grant money will be awarded, current and past deadlines, Catalog of Federal Domestic Assistance (CFDA) numbers, and a sampling of similar government grants.

DARPA Learning with Less Labels LwLL - Machine Learning and Artificial ... (Aug 15, 2018): DARPA Learning with Less Labels (LwLL), HR001118S0044. Abstract due: August 21, 2018, 12:00 noon (ET). Proposal due: October 2, 2018, 12:00 noon (ET). Proposers are highly encouraged to submit an abstract in advance of a proposal to minimize effort and reduce the potential expense of preparing an out-of-scope proposal.
